tl;dr: `git config --global merge.conflictstyle diff3`

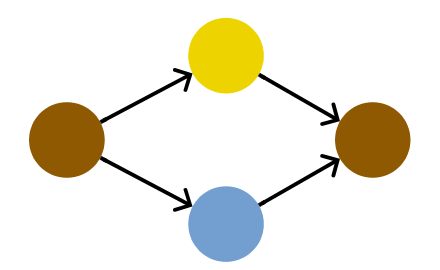

In my previous post, I preached about the one true way to merge MRs in a git workflow. The answer is obviously to rebase to resolve conflicts, then create a merge commit so the MR is preserved for posterity.

What I did not talk about is the reason that pushes so many people to heresy: the fear of having to resolve merge conflicts!

The thing is, merge conflicts are really not hard to resolve when you use the right method. But that method is not the default one. The default one looks like this:
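If you want to see that default view for yourself, `git merge-file` can merge three versions of a single file without any repository. The sketch below is only an illustration: the file names and the `write_hello` helper are hypothetical, and the contents mirror the `hello.rb` example from the git documentation used further down.

```shell
#!/bin/sh
# Sketch: print the default conflict view for the hello.rb example.
# File names and the write_hello helper are hypothetical.
set -e
cd "$(mktemp -d)"

write_hello() {  # $1 = output file, $2 = text inside the puts call
  printf "#! /usr/bin/env ruby\n\ndef hello\n  puts '%s'\nend\n\nhello()\n" "$2" > "$1"
}

write_hello base.rb   'hello world'   # common ancestor
write_hello ours.rb   'hola world'    # our change: "hello" -> "hola"
write_hello theirs.rb 'hello mundo'   # their change: "world" -> "mundo"

# -p writes the merged result to stdout instead of overwriting ours.rb.
# -c pins the default style in case merge.conflictStyle is already set.
# The exit status is the number of conflicts, hence "|| true" under set -e.
git -c merge.conflictStyle=merge merge-file -p -L ours -L base -L theirs \
  ours.rb base.rb theirs.rb > conflict.rb || true
cat conflict.rb
```

The printed result only has the `<<<<<<< ours`, `=======` and `>>>>>>> theirs` sections: you get `'hola world'` and `'hello mundo'`, but nothing tells you which words each side actually changed.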

Basically, you see the results of two different changes, and you have to guess how to combine them, which means understanding what each one was trying to do. Many people find this so painful that they would much rather do it only once, in a single merge commit, than resolve conflicts commit after commit during a rebase.

But all of this comes from the fact that the conflict does not show you enough information to resolve it. What you need to know is what each change was, not just its result. Thankfully, git has an option just for that! By setting `merge.conflictstyle` to `diff3`, your conflicts will look like this (example from the git documentation):

```ruby
#! /usr/bin/env ruby

def hello
<<<<<<< ours
  puts 'hola world'
||||||| base
  puts 'hello world'
=======
  puts 'hello mundo'
>>>>>>> theirs
end

hello()
```

Instead of having 2 versions of the puts line, we have 3. Since 3 > 2, it’s much better!

More seriously, what diff3 adds is the middle section, after the `|||||||` marker: it holds the original version, from the common ancestor of both sides. The sections above and below show how each side changed it.

So, from this, you can see at a glance that we are resolving a conflict between two changes:

- Change “hello” into “hola”

- Change “world” into “mundo”

As humans, we can deduce that the combined effect of these two changes is:

```ruby
puts 'hola mundo'
```

Thanks to this approach, you do not need to understand the rest of the branch. Most of the time, you can just look at a few lines of code and resolve the conflict almost mechanically. It is not as nice as automatic resolution, but it is far from insurmountable.

So set this option globally, and fear merge conflicts no more!

```shell
git config --global merge.conflictstyle diff3
```
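If you would rather see the effect before changing your global config, here is a minimal sketch that recreates the `hello.rb` conflict in a throwaway repository, sets the option only for that repository, and then resolves the conflict the way described above. The temporary directory, the `mundo` branch, the commit identity and the `write_hello` helper are all hypothetical.

```shell
#!/bin/sh
# Sketch: try diff3 conflicts in a throwaway repo without touching the global config.
# Branch name "mundo", the identity and write_hello are made up for the demo.
set -e
cd "$(mktemp -d)"
git init -q
git config user.name "Demo"
git config user.email "demo@example.com"
git config merge.conflictStyle diff3   # repo-local equivalent of the --global setting

write_hello() {  # $1 = text inside the puts call
  printf "#! /usr/bin/env ruby\n\ndef hello\n  puts '%s'\nend\n\nhello()\n" "$1" > hello.rb
}

write_hello 'hello world'     # common ancestor
git add hello.rb
git commit -q -m "base"
git branch mundo              # second branch starts from the same commit

write_hello 'hola world'      # our change: "hello" -> "hola"
git commit -q -am "hola"
git checkout -q mundo
write_hello 'hello mundo'     # their change: "world" -> "mundo"
git commit -q -am "mundo"
git checkout -q -             # back to the first branch

git merge mundo || true       # stops with a conflict; hello.rb gets a ||||||| section
cat hello.rb

# Apply both changes, mark the file as resolved, and conclude the merge.
write_hello 'hola mundo'
git add hello.rb
git commit -q --no-edit
git log --oneline --graph
```

The label printed after `|||||||` may differ from the `base` shown in the documentation example, but the section itself holds the original `puts 'hello world'`, which is what makes the resolution mechanical.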